People who believe AI could become conscious

BBC

I step into the booth with some trepidation. I’m about to be exposed to strobe lights while music is played – as part of a research project to try to understand what makes humans truly human.

It reminds me of the test in the science fiction film Blade Runner, which was designed to distinguish humans from artificially made beings posing as humans.

Could I be a robot from the future and not know it? Would I pass?

The scientists assure me that the experiment is not about this. The “Dreamachine”, as it is called, was designed to examine how the brain creates conscious experiences. The colours I see are vibrant, intense and ever-changing: pinks, magentas and turquoise hues that glow like neon lights.

Pallab experimenting with the “Dreamachine”, which is intended to determine how we create our own unique inner worlds.

The researchers say that the images I am seeing are unique and specific to me, and they believe these patterns can shed light on the nature of consciousness. It’s almost like flying in my mind!

Researchers hope that by studying the nature of consciousness, they can better understand the workings of the silicon brains in artificial intelligence. Many believe that AI systems will become conscious in the near future, if they are not already.

Science fiction has explored the idea of machines having minds of their own for many years. In 1968’s 2001: A Space Odyssey, the HAL 9000, a computer that attacks the astronauts aboard its spaceship, embodied the fear of conscious machines posing an existential threat to humans. And in the final Mission Impossible film, which has just been released, the world is threatened by a powerful rogue AI, described by one character as a “self-aware, self-learning, truth-eating digital parasite”.

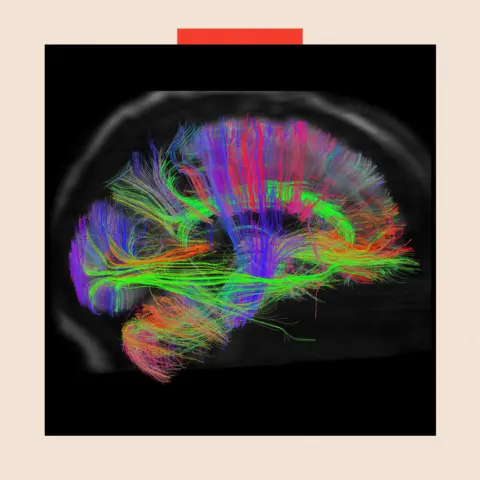

LMPC via Getty Images

Released in 1927, Fritz Lang’s Metropolis foresaw the struggle between humans and technology

But recently, in the real world, thinking on machine consciousness has quickly reached a tipping point, and credible voices have become concerned that this is no longer the stuff of science fiction.

The sudden shift has been prompted by the success of so-called large language models (LLMs), which can be accessed through apps on our phones such as Gemini and ChatGPT. The ability of the latest generation of LLMs to have plausible, free-flowing conversations has surprised even their designers and some of the leading experts in the field.

There is a growing view among some thinkers that as AI becomes even more intelligent, the lights will suddenly turn on inside the machines and they will become conscious.

Others, such as Prof Anil Seth, who leads the Sussex University team, disagree, describing the view as “blindly optimistic and driven by human exceptionalism”.

“We associate consciousness with intelligence and language because they go together in humans,” he says. “But that doesn’t mean the same is true of other animals.”

So what actually is consciousness?

The short answer is that no-one knows. That’s clear from the good-natured but robust arguments among Prof Seth’s own team of young AI specialists, computing experts, neuroscientists and philosophers, who are trying to answer one of the biggest questions in science and philosophy.

Just as the search to find the “spark of life” that made inanimate objects come alive was abandoned in the 19th Century in favour of identifying how individual parts of living systems worked, the Sussex team is now adopting the same approach to consciousness.

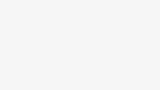

Researchers are studying the brain in attempts to better understand consciousness

They hope to identify patterns of brain activity that explain various properties of conscious experiences, such as changes in electrical signals or blood flow to different regions. The goal is to go beyond looking for mere correlations between brain activity and consciousness, and try to come up with explanations for its individual components.

Prof Seth, the author of a book on consciousness, Being You, worries that we may be rushing headlong into a society that is being rapidly reshaped by the sheer pace of technological change without sufficient knowledge about the science, or thought about the consequences.

“We take it as if the future has already been written; that there is an inevitable march to a superhuman replacement,” he says.

“We did not have these conversations enough with the rise of social media, much to our collective detriment. We can choose what we want.”

In November 2024, Kyle Fish, an AI welfare officer at Anthropic, published a report suggesting that AI consciousness is a real possibility in the near term. He recently told The New York Times that he also believes there is a small (15%) chance that chatbots are already conscious.

One reason he thinks it possible is that no-one, not even the people who developed these systems, knows exactly how they work. That’s worrying, says Prof Murray Shanahan, principal scientist at Google DeepMind and emeritus professor in AI at Imperial College, London.

“We don’t actually understand very well the way in which LLMs work internally, and that is some cause for concern,” he tells the BBC.

According to Prof Shanahan, it’s important for tech firms to get a proper understanding of the systems they’re building – and researchers are looking at that as a matter of urgency.

“We are in a strange position of building these extremely complex things, where we don’t have a good theory of exactly how they achieve the remarkable things they are achieving,” he says. “We need to understand how these systems work to be able to guide them in the right direction and ensure their safety.”

‘The next stage in humanity’s evolution’

The prevailing view in the tech sector is that LLMs are not currently conscious in the way we experience the world, and probably not in any way at all. But that is something that the married couple Profs Lenore and Manuel Blum, both emeritus professors at Carnegie Mellon University in Pittsburgh, Pennsylvania, believe will change, possibly quite soon.

According to the Blums, that could happen as AI systems and LLMs receive more live sensory inputs from the real world, such as vision and touch, by connecting cameras and haptic sensors (related to touch) to them. The Blums are creating a computer model called Brainish that creates its own internal languages to process this sensory data, in the hope of mimicking the processes that occur in the brain. “AI consciousness is inevitable,” says Lenore.

Manuel chips in enthusiastically with an impish grin, saying that the new systems, which he, too, firmly believes will emerge, will be the “next stage in humanity’s evolution”.

Conscious robots, he believes, “are our progeny. Down the road, machines like these will be entities that will be on Earth and maybe on other planets when we are no longer around”.

David Chalmers – Professor of Philosophy and Neural Science at New York University – defined the distinction between real and apparent consciousness at a conference in Tucson, Arizona in 1994. He outlined the “hard problem” of figuring out how and why complex brain operations give rise to conscious experiences, such as our emotional response to hearing a nightingale sing. “Maybe AI systems augment our brains,” he says.

On the sci-fi implications of that, he wryly observes: “In my profession, there is a fine line between science fiction and philosophy”.

‘Meat-based computers’

Prof Seth, however, is exploring the idea that true consciousness can only be realised by living systems.

“A strong case can be made that it isn’t computation that is sufficient for consciousness but being alive,” he says.

“In brains, unlike computers, it’s hard to separate what they do from what they are.” Without this separation, he argues, it’s difficult to believe that brains “are simply meat-based computers”.

Companies such as Cortical Labs are working with ‘organoids’ made up of nerve cells

And if Prof Seth’s intuition about life being important is on the right track, the technology most likely to become conscious will not be made of silicon running computer code, but will instead consist of tiny clusters of nerve cells, the size of lentil grains, that are currently being grown in labs.

Called “mini-brains” in media reports, they are referred to as “cerebral organoids” by the scientific community, which uses them to research how the brain works, and for drug testing.

One Australian firm, Cortical Labs, in Melbourne, has even developed a system of nerve cells in a dish that can play the 1972 sports video game Pong. Although it is a far cry from a conscious system, the so-called “brain in a dish” is spooky to watch as it moves a paddle up and down a screen to bat back a pixelated ball.

Some experts feel that if consciousness is to emerge, it is most likely to be from larger, more advanced versions of these living tissue systems.

The firm’s chief scientific and operating officer, Dr Brett Kagan, is mindful that any emerging uncontrollable intelligence might have priorities that “are not aligned with ours”. In that case, he says half-jokingly, possible organoid overlords would be easier to defeat because “there is always bleach” to pour over the fragile neurons.

Returning to a more solemn tone, he says the small but significant threat of artificial consciousness is something he’d like the big players in the field to focus on more as part of serious attempts to advance our scientific understanding – but says that “unfortunately, we don’t see any earnest efforts in this space”.

The illusion of consciousness

The more immediate problem, though, could be how the illusion of machines being conscious affects us.

In just a few years, we may well be living in a world populated by humanoid robots and deepfakes that seem conscious, according to Prof Seth. “It’ll mean we’ll trust them more, share more information with them, and be more open to persuasion,” he says.

But the greater risk from the illusion of consciousness is a “moral corrosion”, he says.

“It will distort our moral priorities by making us devote more of our resources to caring for these systems at the expense of the real things in our lives” – meaning that we might have compassion for robots, but care less for other humans.

And that could fundamentally alter us, according to Prof Shanahan.

“Increasingly, human relationships are going to be replicated in AI relationships: they will be used as teachers, friends, adversaries in computer games and even romantic partners,” he says. “I don’t know if that is good or bad, but I do not think we can prevent it.”

Top image credit: Getty Images
